PaddlePaddle: CNN Image Classification on CIFAR-10 (2)



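PaddlePaddle: CNN Image Classification on CIFAR-10 (1) covered the PaddlePaddle deep learning framework, the layer structure of convolutional neural networks (CNNs), and how the CIFAR-10 dataset is loaded. This part classifies CIFAR-10 images with two classic CNN architectures, AlexNet and ResNet50.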


(I) Implementing AlexNet and ResNet50 with PaddlePaddle

1. Implementing AlexNet

(1) AlexNet Network Architecture

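First convolutional layer

Input image size: 224×224×3 (or 227×227×3)

The first convolutional layer is 11×11×96: 96 kernels of size 11×11 with stride 4, followed by ReLU. With a 227×227 input and no padding, the output size is (227 - 11)/4 + 1 = 55 (a 224×224 input does not divide evenly, which is why 227 is often quoted), so the output feature maps are 55×55×96. An LRN layer follows and does not change the size.

Max pooling layer: kernel 3×3, stride 2, so the feature maps become 27×27×96.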

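Second convolutional layer

Input tensor: 27×27×96

Kernels: 5×5×256, i.e., 256 kernels of size 5×5, stride 1 with padding 2, so the spatial size is unchanged. Again followed by ReLU and an LRN layer.

Max pooling layer: kernel 3×3, stride 2, so the feature maps become 13×13×256.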

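Third to fifth convolutional layers

Input tensor: 13×13×256

Layer 3: 3×3×384 (384 kernels of size 3×3), stride 1, followed by ReLU

Layer 4: 3×3×384, stride 1, followed by ReLU

Layer 5: 3×3×256, stride 1, followed by ReLU

All three use padding 1, so the spatial size stays 13×13. Layer 5 is followed by a max pooling layer with kernel 3×3 and stride 2, giving 6×6×256 feature maps.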

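Sixth to eighth layers: fully connected layers

The next three layers are fully connected:

FC: 4096 + ReLU

FC: 4096 + ReLU

FC: 1000, the last layer, followed by softmax to output the probabilities of the 1000 classes.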
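All the sizes above follow from the standard output-size formula `out = floor((in + 2*padding - kernel) / stride) + 1`. The minimal Python sketch below traces them for a 227×227 input. The padding values for conv2 to conv5 (2, 1, 1, 1) are an assumption chosen so that those layers keep the spatial size, as stated above; `out_size` and `trace_shapes` are hypothetical helper names.

```python
def out_size(size, kernel, stride, padding=0):
    """Spatial output size of a conv/pool layer (floor division, no ceil mode)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels) for the layers described above.
# Assumption: conv2-conv5 use "same" padding so their spatial size is unchanged.
ALEXNET_LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, 96),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, 256),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, 256),
]


def trace_shapes(input_size=227):
    """Return (name, height, width, channels) after every layer."""
    size = input_size
    shapes = []
    for name, kernel, stride, padding, channels in ALEXNET_LAYERS:
        size = out_size(size, kernel, stride, padding)
        shapes.append((name, size, size, channels))
    return shapes


if __name__ == "__main__":
    for name, h, w, c in trace_shapes(227):
        print(f"{name}: {h}x{w}x{c}")
    _, h, w, c = trace_shapes(227)[-1]
    print("flattened input to the first FC layer:", h * w * c)
```

The last line shows why the first fully connected layer takes 6 × 6 × 256 = 9216 inputs.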

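Tricks in AlexNet

AlexNet applied CNNs to a deeper and wider network and achieved higher classification accuracy than earlier networks such as LeNet. Several of its tricks are worth knowing.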

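Use of ReLU

AlexNet replaced sigmoid with ReLU as the activation function. ReLU trains faster and alleviates the vanishing (diminishing) gradient problem that sigmoid suffers from in deeper networks.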
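One way to see the gradient argument: the derivative of sigmoid, σ(x)(1 - σ(x)), never exceeds 0.25, while the derivative of ReLU is 1 for every positive input. The NumPy sketch below compares the two; it ignores the weights entirely and is only meant to illustrate why a product of many sigmoid derivatives shrinks geometrically. The helper names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_grad(x):
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)); its maximum is 0.25 at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x):
    # d/dx max(0, x): 1 for x > 0, 0 otherwise (0 is chosen at x = 0 by convention)
    return (x > 0).astype(np.float64)


x = np.linspace(-6, 6, 13)  # the integers -6, -5, ..., 6
print("max sigmoid'(x):", sigmoid_grad(x).max())
print("max relu'(x):", relu_grad(x).max())

# Best-case factor contributed by the activations alone after `depth` layers
# of backpropagation: sigmoid shrinks it geometrically, ReLU does not.
for depth in (2, 5, 10):
    print(depth, 0.25 ** depth, 1.0 ** depth)
```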

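Dropout

Dropout randomly ignores (zeroes out) some neurons during training to reduce overfitting. AlexNet applies it with probability 0.5 in the first two fully connected layers.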
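A minimal NumPy sketch of dropout, using the common inverted-dropout variant: the surviving activations are scaled by 1/(1 - p) during training, so inference is just the identity. This illustrates the idea and is not Paddle's implementation. As far as we know, `fluid.dygraph.Dropout` defaults to `dropout_implementation='downgrade_in_infer'`, which scales at inference time instead, so check the documentation for your Paddle version. The `dropout` helper is hypothetical.

```python
import numpy as np


def dropout(x, p=0.5, training=True, rng=None):
    """Inverted dropout: zero each unit with probability p during training and
    rescale the survivors by 1 / (1 - p); return x unchanged at inference."""
    if not training or p == 0.0:
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep_mask = rng.random(x.shape) >= p  # True with probability 1 - p
    return x * keep_mask / (1.0 - p)


rng = np.random.default_rng(0)
x = np.ones((4, 8))
y = dropout(x, p=0.5, training=True, rng=rng)
print(y)  # every entry is either 0.0 (dropped) or 2.0 (kept and rescaled)
print(dropout(x, training=False) is x)  # identity at inference
```

Rescaling during training keeps the expected activation equal to the input, which is why no correction is needed at test time.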

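Overlapping max pooling

Earlier CNNs commonly used average pooling, whereas AlexNet uses max pooling throughout, avoiding the blurring effect of average pooling. In addition, the pooling stride (2) is smaller than the kernel size (3×3), so neighboring pooling windows overlap, which makes the extracted features richer.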
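The overlap is easy to see in a naive single-channel NumPy sketch of max pooling without padding (`max_pool2d` is a hypothetical helper, not a Paddle API). With kernel 3 and stride 2, consecutive windows share one row or column, and a 55×55 feature map pools down to 27×27 as described above.

```python
import numpy as np


def max_pool2d(x, kernel=3, stride=2):
    """Max pooling of a (H, W) array without padding.
    Windows overlap whenever stride < kernel, as in AlexNet (kernel 3, stride 2)."""
    h, w = x.shape
    out_h = (h - kernel) // stride + 1
    out_w = (w - kernel) // stride + 1
    out = np.empty((out_h, out_w), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            out[i, j] = x[r:r + kernel, c:c + kernel].max()
    return out


fmap = np.arange(55 * 55, dtype=np.float32).reshape(55, 55)
pooled = max_pool2d(fmap, kernel=3, stride=2)
print(pooled.shape)

# On a 5x5 input the two windows per axis cover rows/columns 0-2 and 2-4,
# so index 2 is shared by both windows.
print(max_pool2d(np.arange(25).reshape(5, 5)))
```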

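Introduction of the LRN layer

Local response normalization (LRN) introduces competition among neighboring neurons: neurons with relatively large responses become more prominent, while those with smaller responses are suppressed.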

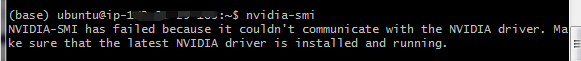
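In the AlexNet paper, LRN is applied across channels: each activation a^i at position (x, y) is divided by (k + α · Σ_j (a^j)²)^β, where j runs over the n channels centered on i (clipped at the first and last channel), with k = 2, n = 5, α = 10⁻⁴ and β = 0.75. The NumPy sketch below implements that formula on a (C, H, W) array. Frameworks differ in details (PyTorch's `LocalResponseNorm`, for example, divides α by n), so treat it as illustrative; `lrn` is a hypothetical helper.

```python
import numpy as np


def lrn(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Cross-channel local response normalization of a (C, H, W) array.

    b[i] = a[i] / (k + alpha * sum_{j=max(0, i - n//2)}^{min(C - 1, i + n//2)} a[j]**2) ** beta
    Defaults follow the hyperparameters reported for AlexNet.
    """
    num_channels = a.shape[0]
    squared = a.astype(np.float64) ** 2
    out = np.empty(a.shape, dtype=np.float64)
    for i in range(num_channels):
        lo = max(0, i - n // 2)
        hi = min(num_channels - 1, i + n // 2)
        denom = (k + alpha * squared[lo:hi + 1].sum(axis=0)) ** beta
        out[i] = a[i] / denom
    return out


# A strong response in channel 2 suppresses its neighbour in channel 3.
quiet = np.zeros((5, 1, 1))
quiet[3] = 1.0
loud = quiet.copy()
loud[2] = 100.0
print(lrn(quiet)[3, 0, 0], lrn(loud)[3, 0, 0])
```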

GPU acceleration

Training was accelerated on GPUs; the original network was split across two GPUs.

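(2) AlexNet Code Implementation

The implementation below adapts AlexNet to small inputs such as 28×28 MNIST and 32×32 CIFAR-10 images: the first convolution uses 5×5 kernels with stride 1 instead of 11×11 with stride 4, pooling is 2×2 with stride 2, the first two fully connected layers have 2048 units instead of 4096, and LRN is omitted.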

```python
# paddle_models.py
import paddle.fluid as fluid
import paddle.fluid.dygraph.nn as nn


class AlexNet(fluid.dygraph.Layer):
    def __init__(self, in_size, in_channels, num_classes):
        super(AlexNet, self).__init__()
        self.num_classes = num_classes
        # Convolutional layers ("same" padding, so only pooling changes the size)
        self.conv1 = nn.Conv2D(num_channels=in_channels, num_filters=96, filter_size=5, stride=1, padding=2, act='relu')
        self.pool1 = nn.Pool2D(pool_size=2, pool_stride=2, pool_type='max')
        self.conv2 = nn.Conv2D(num_channels=96, num_filters=256, filter_size=5, stride=1, padding=2, act='relu')
        self.pool2 = nn.Pool2D(pool_size=2, pool_stride=2, pool_type='max')
        self.conv3 = nn.Conv2D(num_channels=256, num_filters=384, filter_size=3, stride=1, padding=1, act='relu')
        self.conv4 = nn.Conv2D(num_channels=384, num_filters=384, filter_size=3, stride=1, padding=1, act='relu')
        self.conv5 = nn.Conv2D(num_channels=384, num_filters=256, filter_size=3, stride=1, padding=1, act='relu')
        self.pool5 = nn.Pool2D(pool_size=2, pool_stride=2, pool_type='max')
        # Three 2x2/stride-2 poolings shrink the spatial size by a factor of 8
        out_size = in_size // 8
        # Fully connected layers
        self.fc1 = nn.Linear(input_dim=256 * out_size * out_size, output_dim=2048, act='relu')
        self.dropout1 = nn.Dropout(p=0.5)
        self.fc2 = nn.Linear(input_dim=2048, output_dim=2048, act='relu')
        self.dropout2 = nn.Dropout(p=0.5)
        self.fc3 = nn.Linear(input_dim=2048, output_dim=self.num_classes)

    def forward(self, x, y=None):
        # Convolutional layers
        x = self.conv1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.pool2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.pool5(x)
        # Fully connected layers: flatten (N, C, H, W) to (N, C*H*W)
        x = fluid.layers.reshape(x, (x.shape[0], -1))
        x = self.fc1(x)
        x = self.dropout1(x)
        x = self.fc2(x)
        x = self.dropout2(x)
        x = self.fc3(x)
        # Return logits, plus the per-sample loss when labels are given
        if y is not None:
            loss = fluid.layers.softmax_with_cross_entropy(x, y)
            return x, loss
        else:
            return x

    def forward_size(self, x, y=None):
        """Run one forward pass and print the output shape of every layer."""
        print('Paddle-AlexNet')
        print('input', x.shape)
        # Convolutional layers
        x = self.conv1(x)
        print('conv1', x.shape)
        x = self.pool1(x)
        print('pool1', x.shape)
        x = self.conv2(x)
        print('conv2', x.shape)
        x = self.pool2(x)
        print('pool2', x.shape)
        x = self.conv3(x)
        print('conv3', x.shape)
        x = self.conv4(x)
        print('conv4', x.shape)
        x = self.conv5(x)
        print('conv5', x.shape)
        x = self.pool5(x)
        print('pool5', x.shape)
        # Fully connected layers
        x = fluid.layers.reshape(x, (x.shape[0], -1))
        x = self.fc1(x)
        x = self.dropout1(x)
        print('fc1', x.shape)
        x = self.fc2(x)
        x = self.dropout2(x)
        print('fc2', x.shape)
        x = self.fc3(x)
        print('fc3', x.shape)
        if y is not None:
            loss = fluid.layers.softmax_with_cross_entropy(x, y)
            print('loss', loss.shape)


if __name__ == '__main__':
    # Shape check on a dummy batch of two 32x32 RGB images
    with fluid.dygraph.guard(fluid.CPUPlace()):
        in_size = 32
        x = fluid.layers.zeros((2, 3, in_size, in_size), dtype='float32')
        y = fluid.layers.randint(0, 10, (2, 1), dtype='int64')
        model = AlexNet(in_size=in_size, in_channels=3, num_classes=10)
        model.forward_size(x, y)
        predict, loss = model(x, y)
        print(predict.shape, loss.shape)
```
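Why `out_size = in_size // 8`? Every convolution in the variant above uses "same" padding (5×5 with padding 2, 3×3 with padding 1, stride 1), so only the three 2×2/stride-2 poolings change the spatial size, and each one halves it with flooring. The small pure-Python check below (hypothetical helper names, no Paddle needed) confirms the flattened `fc1` input size for MNIST (28×28) and CIFAR-10 (32×32).

```python
def pool_out(size, kernel=2, stride=2):
    # Output size of a 2x2/stride-2 max pool without padding (floor division)
    return (size - kernel) // stride + 1


def alexnet_small_flat_dim(in_size):
    """Trace the spatial size through pool1, pool2 and pool5 of the AlexNet
    variant above and return the flattened input size of fc1."""
    size = in_size
    for _ in range(3):
        size = pool_out(size)
    return 256 * size * size


for in_size in (28, 32):
    print(in_size, alexnet_small_flat_dim(in_size), 256 * (in_size // 8) ** 2)
```

For CIFAR-10 this gives 256 × 4 × 4 = 4096 inputs to `fc1`, and for MNIST 256 × 3 × 3 = 2304.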

2. Implementing ResNet

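ResNet Code Implementation

Note that `DistResNet` below stacks bottleneck blocks with depth `[3, 3, 3, 3]` and base widths `[16, 32, 32, 64]` (expanded ×4 inside each block), a slimmed-down configuration for 32×32 inputs rather than the standard ResNet-50 layout of `[3, 4, 6, 3]` blocks with base widths 64 to 512. It is also written with the static-graph `fluid.layers` API, whereas the AlexNet above uses dygraph.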

```python
import math

import paddle.fluid as fluid


class DistResNet():
    def __init__(self, is_train=True):
        self.is_train = is_train
        self.weight_decay = 1e-4

    def net(self, input, class_dim=10):
        # Number of bottleneck blocks per stage and base width of each stage
        depth = [3, 3, 3, 3]
        num_filters = [16, 32, 32, 64]

        # Stem: 3x3 conv + BN + ELU, then 3x3/stride-2 max pooling
        conv = self.conv_bn_layer(
            input=input, num_filters=16, filter_size=3, act='elu')
        conv = fluid.layers.pool2d(
            input=conv,
            pool_size=3,
            pool_stride=2,
            pool_padding=1,
            pool_type='max')

        # Residual stages: the first block of every stage except the first downsamples
        for block in range(len(depth)):
            for i in range(depth[block]):
                conv = self.bottleneck_block(
                    input=conv,
                    num_filters=num_filters[block],
                    stride=2 if i == 0 and block != 0 else 1)
        conv = fluid.layers.batch_norm(input=conv, act='elu')
        print(conv.shape)

        # Global average pooling + softmax classifier
        pool = fluid.layers.pool2d(
            input=conv, pool_size=2, pool_type='avg', global_pooling=True)
        stdv = 1.0 / math.sqrt(pool.shape[1] * 1.0)
        out = fluid.layers.fc(input=pool,
                              size=class_dim,
                              act="softmax",
                              param_attr=fluid.param_attr.ParamAttr(
                                  initializer=fluid.initializer.Uniform(-stdv, stdv),
                                  regularizer=fluid.regularizer.L2Decay(self.weight_decay)),
                              bias_attr=fluid.ParamAttr(
                                  regularizer=fluid.regularizer.L2Decay(self.weight_decay)))
        return out

    def conv_bn_layer(self,
                      input,
                      num_filters,
                      filter_size,
                      stride=1,
                      groups=1,
                      act=None,
                      bn_init_value=1.0):
        # Bias-free convolution with "same"-style padding, followed by batch norm
        conv = fluid.layers.conv2d(
            input=input,
            num_filters=num_filters,
            filter_size=filter_size,
            stride=stride,
            padding=(filter_size - 1) // 2,
            groups=groups,
            act=None,
            bias_attr=False,
            param_attr=fluid.ParamAttr(regularizer=fluid.regularizer.L2Decay(self.weight_decay)))
        return fluid.layers.batch_norm(
            input=conv, act=act, is_test=not self.is_train,
            param_attr=fluid.ParamAttr(
                initializer=fluid.initializer.Constant(bn_init_value),
                regularizer=None))

    def shortcut(self, input, ch_out, stride):
        # Use a 1x1 projection when the channel count or spatial size changes
        ch_in = input.shape[1]
        if ch_in != ch_out or stride != 1:
            return self.conv_bn_layer(input, ch_out, 1, stride)
        else:
            return input

    def bottleneck_block(self, input, num_filters, stride):
        # 1x1 reduce -> 3x3 (carries the stride) -> 1x1 expand to 4x channels
        conv0 = self.conv_bn_layer(
            input=input, num_filters=num_filters, filter_size=1, act='relu')
        conv1 = self.conv_bn_layer(
            input=conv0,
            num_filters=num_filters,
            filter_size=3,
            stride=stride,
            act='relu')
        # BN scale initialised to 0, so each residual branch starts out contributing nothing
        conv2 = self.conv_bn_layer(
            input=conv1, num_filters=num_filters * 4, filter_size=1, act=None, bn_init_value=0.0)
        short = self.shortcut(input, num_filters * 4, stride)
        return fluid.layers.elementwise_add(x=short, y=conv2, act='relu')
```
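To see what `DistResNet.net` actually builds, the pure-Python sketch below traces channels and spatial size for a 32×32 input and counts convolutions, mirroring the padding rule `(filter_size - 1) // 2` in `conv_bn_layer` and the projection rule in `shortcut`. It is a bookkeeping aid with a hypothetical helper name, not Paddle code.

```python
def conv_out(size, kernel, stride, padding):
    return (size + 2 * padding - kernel) // stride + 1


def trace_dist_resnet(in_size=32, depth=(3, 3, 3, 3), num_filters=(16, 32, 32, 64)):
    """Return [(channels, size) after each stage], main-path conv count,
    and number of 1x1 projection shortcuts for DistResNet.net."""
    size = conv_out(in_size, 3, 1, 1)      # stem conv: 3x3, stride 1, 16 filters
    channels, n_conv, n_proj = 16, 1, 0
    size = conv_out(size, 3, 2, 1)         # max pool: 3x3, stride 2, padding 1
    stages = []
    for block, reps in enumerate(depth):
        for i in range(reps):
            stride = 2 if i == 0 and block != 0 else 1
            out_ch = num_filters[block] * 4
            if channels != out_ch or stride != 1:
                n_proj += 1                # shortcut() uses a 1x1 conv + BN
            size = conv_out(size, 3, stride, 1)  # only the 3x3 conv changes size
            channels = out_ch
            n_conv += 3                    # 1x1 -> 3x3 -> 1x1 bottleneck
        stages.append((channels, size))
    return stages, n_conv, n_proj


stages, n_conv, n_proj = trace_dist_resnet()
print(stages)
print("main-path convs:", n_conv, "| projection shortcuts:", n_proj)
```

With the default configuration this reports 37 main-path convolutions (1 stem + 12 bottlenecks × 3) plus 4 projection shortcuts and one FC layer. Passing `depth=(3, 4, 6, 3)` gives 49 main-path convolutions, which together with the final FC layer are the 50 weight layers that give ResNet-50 its name.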

(II) Input Arguments and Logging

```python
# utils.py
import os
import sys
import logging
import argparse


def setup_logger(name, save_dir='./jobs', log_file='train.log'):
    """Create a logger that writes to stdout and, optionally, to save_dir/log_file."""
    logger = logging.getLogger(name)
    logger.propagate = False
    logger.setLevel(logging.INFO)
    # Log to stdout
    stdout_handler = logging.StreamHandler(stream=sys.stdout)
    stdout_handler.setLevel(logging.INFO)
    formatter = logging.Formatter('[%(asctime)s %(filename)s %(levelname)s] %(message)s')
    stdout_handler.setFormatter(formatter)
    logger.addHandler(stdout_handler)
    # Log to file
    if save_dir is not None:
        if not os.path.exists(save_dir):
            os.makedirs(save_dir)
        file_handler = logging.FileHandler(os.path.join(save_dir, log_file), mode='w')
        file_handler.setLevel(logging.INFO)
        file_handler.setFormatter(formatter)
        logger.addHandler(file_handler)
    return logger


def parse_args():
    parser = argparse.ArgumentParser(description='Training With PaddlePaddle or PyTorch.')
    # CUDA
    parser.add_argument('--no-cuda', action='store_true', default=False)
    # Dataset
    parser.add_argument('--dataset', type=str, choices=('mnist', 'cifar10'), default='mnist')
    # Number of epochs
    parser.add_argument('--epochs', type=int, default=10)
    # Initial learning rate
    parser.add_argument('--lr', type=float, default=0.01)
    # L2 weight decay
    parser.add_argument('--weight_decay', type=float, default=1e-4)
    # Batch size
    parser.add_argument('--batch_size', type=int, default=100)
    # Logging interval (in iterations)
    parser.add_argument('--log_iter', type=int, default=100)
    args = parser.parse_args()
    return args
```
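The trainer below is called as `trainer(job_dir=job_dir, logger=logger, **vars(args))`, so every option name must match a `trainer` keyword argument. argparse turns dashes into underscores when deriving attribute names, so `--no-cuda` becomes `no_cuda`. The sketch rebuilds the same parser in a hypothetical `build_parser()` so that it can parse a hand-written argument list instead of `sys.argv`.

```python
import argparse


def build_parser():
    # Same options as parse_args() above, returned instead of parsed so that
    # a hand-written argv list can be passed in (hypothetical helper).
    parser = argparse.ArgumentParser(description='Training With PaddlePaddle or PyTorch.')
    parser.add_argument('--no-cuda', action='store_true', default=False)
    parser.add_argument('--dataset', type=str, choices=('mnist', 'cifar10'), default='mnist')
    parser.add_argument('--epochs', type=int, default=10)
    parser.add_argument('--lr', type=float, default=0.01)
    parser.add_argument('--weight_decay', type=float, default=1e-4)
    parser.add_argument('--batch_size', type=int, default=100)
    parser.add_argument('--log_iter', type=int, default=100)
    return parser


args = build_parser().parse_args(['--dataset', 'cifar10', '--no-cuda', '--epochs', '20'])
kwargs = vars(args)  # the dict that **vars(args) unpacks into trainer(...)
print(kwargs)
```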

(III) Model Training and Testing with PaddlePaddle

```python
# paddle_trainer.py
import os
import logging

import numpy as np
import paddle.fluid as fluid

from paddle_models import AlexNet
from datasets import mnist, cifar10, data_loader
from utils import setup_logger, parse_args


def tester(model, data_loader, place=fluid.CPUPlace()):
    """Return the classification accuracy of `model` on `data_loader`."""
    model.eval()
    correct, total = 0, 0
    with fluid.dygraph.guard(place), fluid.dygraph.no_grad():
        for batch_id, (batch_image, batch_label) in enumerate(data_loader(), 1):
            # Prepare data
            batch_image = fluid.dygraph.to_variable(batch_image)
            batch_label = fluid.dygraph.to_variable(batch_label.reshape(-1,))
            # Forward pass: predicted class = index of the largest logit
            predict = fluid.layers.argmax(model(batch_image), axis=1)
            correct += fluid.layers.reduce_sum(fluid.layers.equal(predict, batch_label).astype('int32')).numpy()[0]
            total += batch_label.shape[0]
    return correct / total


def trainer(dataset='mnist', epochs=10, lr=0.01, weight_decay=1e-4, batch_size=100, no_cuda=False,
            log_iter=100, job_dir='./paddle_mnist', logger=None):
    if not os.path.exists(job_dir):
        os.makedirs(job_dir)
    if logger is None:
        logger = logging
        logger.basicConfig(level=logging.INFO)
    if no_cuda:
        place = fluid.CPUPlace()
    else:
        place = fluid.CUDAPlace(0)

    # Training data: look up the dataset loader by name instead of using eval()
    train_dataset, test_dataset = {'mnist': mnist, 'cifar10': cifar10}[dataset]()
    train_loader = data_loader(train_dataset, batch_size=batch_size, shuffle=True)
    test_loader = data_loader(test_dataset, batch_size=batch_size, shuffle=False)
    logger.info('Dataset: {} | Epochs: {} | LR: {} | Weight Decay: {} | Batch Size: {} | GPU: {}'.format(
        dataset, epochs, lr, weight_decay, batch_size, not no_cuda))

    train_loss = list()
    test_accuracy = list()
    n_iter = 0  # iteration counter used as the x-axis of the saved curves
    with fluid.dygraph.guard(place):
        # Classification model
        if dataset == 'mnist':
            model = AlexNet(in_size=28, in_channels=1, num_classes=10)
        elif dataset == 'cifar10':
            model = AlexNet(in_size=32, in_channels=3, num_classes=10)
        else:
            raise ValueError('dataset should be in (mnist, cifar10)')
        model.train()

        # Learning rate: polynomial decay from lr to 0 over all training steps
        total_step = int(epochs * np.ceil(train_dataset[0].shape[0] / batch_size))
        learning_rate = fluid.dygraph.PolynomialDecay(lr, total_step, 0, power=0.9)
        # Optimizer: SGD with momentum and L2 weight decay
        optimizer = fluid.optimizer.MomentumOptimizer(learning_rate=learning_rate, momentum=0.9,
                                                      parameter_list=model.parameters(),
                                                      regularization=fluid.regularizer.L2Decay(weight_decay))

        for epoch_id in range(1, epochs + 1):
            for batch_id, (batch_image, batch_label) in enumerate(train_loader(), 1):
                # Prepare data
                batch_image = fluid.dygraph.to_variable(batch_image)
                batch_label = fluid.dygraph.to_variable(batch_label)
                # Forward pass
                predict, loss = model(batch_image, batch_label)
                avg_loss = fluid.layers.mean(loss)
                # Backward pass and parameter update
                model.clear_gradients()
                avg_loss.backward()
                optimizer.minimize(avg_loss)
                if batch_id % log_iter == 0:
                    logger.info('Epoch: {} | Iter: {} | Loss: {:.6f} | LR: {:.6f}'.format(
                        epoch_id, batch_id, avg_loss.numpy()[0], optimizer.current_step_lr()))
                    n_iter += log_iter
                    train_loss.append((avg_loss.numpy()[0], n_iter))
            # Evaluate on the test set after every epoch
            accuracy = tester(model, test_loader, place)
            logger.info('Epoch: {} | Acc: {:.4f}'.format(epoch_id, accuracy))
            test_accuracy.append((accuracy, n_iter))
            model.train()

        # Save model parameters
        fluid.save_dygraph(model.state_dict(), os.path.join(job_dir, 'epoch_%d' % epochs))
        logger.info('Model File: {}'.format(os.path.join(job_dir, 'epoch_%d' % epochs)))

    # Save training loss and test accuracy as (value, iteration) pairs
    train_loss = np.array(train_loss)
    test_accuracy = np.array(test_accuracy)
    train_loss.tofile(os.path.join(job_dir, 'train_loss.bin'))
    test_accuracy.tofile(os.path.join(job_dir, 'test_accuracy.bin'))


if __name__ == '__main__':
    args = parse_args()
    job_dir = './paddle_' + args.dataset
    logger = setup_logger('paddle_' + args.dataset, save_dir=job_dir)
    trainer(job_dir=job_dir, logger=logger, **vars(args))
```
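`ndarray.tofile()` writes raw values with no shape or dtype header, so the saved curves have to be reshaped by hand when loaded back. A minimal sketch, assuming the arrays were stored as float64 (what `np.array` typically produces from the (float loss, int iteration) pairs above; check `train_loss.dtype` before saving if unsure). `load_history` is a hypothetical helper, and the round-trip uses dummy values, not training results.

```python
import os
import tempfile

import numpy as np


def load_history(path, dtype=np.float64):
    """Read a (value, iteration) history written with ndarray.tofile().
    tofile() stores raw values only, so the (N, 2) layout is restored here."""
    return np.fromfile(path, dtype=dtype).reshape(-1, 2)


# Round-trip demo with dummy numbers (not real training results)
history = np.array([(2.30, 100), (1.85, 200), (1.52, 300)])
path = os.path.join(tempfile.mkdtemp(), 'train_loss.bin')
history.tofile(path)
loaded = load_history(path)
values, iterations = loaded[:, 0], loaded[:, 1].astype(int)
print(values, iterations)
```

If the dtype matters across machines, `np.save`/`np.load` avoid this bookkeeping because the `.npy` format stores shape and dtype.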

(IV) Training Results

1. python paddle_trainer.py --model=AlexNet

2. python paddle_trainer.py --model=ResNet50

References:

1. Image Classification on CIFAR-10 / MNIST with PaddlePaddle / PyTorch

2. PaddlePaddle official tutorials and documentation
